Sensing Cognitive Multitasking for a Brain-Based Adaptive User Interface

Erin Treacy Solovey, Francine Lalooses, Krysta Chauncey, Douglas Weaver, Margarita Parasi, Matthias Scheutz, Angelo Sassaroli, Sergio Fantini, Paul Schermerhorn, Audrey Girouard, Robert J.K. Jacob

Presented at CHI 2011, May 7-12, 2011, Vancouver, British Columbia, Canada

Author Bios

- Erin Treacy Solovey is a PhD candidate at Tufts University with an interest in reality-based interaction systems to enhance learning.

- Francine Lalooses is a graduate student at Tufts University who studies brain-computer interaction.

- Krysta Chauncey is a Post-Doc at Tufts University with an interest in brain-computer interaction.

- Douglas Weaver is a Master's student at Tufts University working with adaptive brain-computer interfaces.

- Margarita Parasi graduated from Tufts University and is now a Junior Software Developer at Eze Castle.

- Matthias Scheutz is the Director of Tufts University's Human-Robot Interaction Lab.

- Angelo Sassaroli is a Research Assistant Professor at Tufts University and received his doctorate from the University of Electro-Communications.

- Sergio Fantini is a Professor of Biomedical Engineering at Tufts University, with research in biomedical optics.

- Paul Schermerhorn is affiliated with Tufts University.

- Audrey Girouard received her PhD from Tufts University and is now an Assistant Professor at Carleton University's School of Information Technology.

- Robert J.K. Jacob is a Professor of Computer Science at Tufts University with research in new interaction modes.

Summary

Hypothesis

Can non-invasive functional near-infrared spectroscopy (fNIRS) inform the design of user interfaces that support multitasking? How do its results compare to those of a prior study that used functional MRI (fMRI)?

Methods

Their preliminary study repeated the earlier functional MRI study using fNIRS, with the goal of distinguishing the same brain states that fMRI had. Apart from the sensors, the experimental paradigm was identical. After removing noise from the data, the authors classified it using leave-one-out cross-validation.
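To make the leave-one-out step concrete, here is a minimal Python sketch. This summary does not say which classifier the authors used, so a nearest-centroid classifier stands in as an assumption, and the feature vectors (for example, per-channel averages of the fNIRS signal) are hypothetical. Each sample is held out once, the classifier is trained on all the others, and accuracy is the fraction of held-out samples labeled correctly.

```python
from statistics import mean


def centroid(vectors):
    """Component-wise mean of equal-length feature vectors."""
    return [mean(column) for column in zip(*vectors)]


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid_predict(train, features):
    """Stand-in classifier (an assumption): pick the label whose class centroid is closest."""
    by_label = {}
    for vector, label in train:
        by_label.setdefault(label, []).append(vector)
    centroids = {label: centroid(vectors) for label, vectors in by_label.items()}
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


def leave_one_out_accuracy(samples):
    """Hold out each (features, label) sample once, train on the rest, score the held-out one."""
    correct = 0
    for i, (features, label) in enumerate(samples):
        train = samples[:i] + samples[i + 1:]
        if nearest_centroid_predict(train, features) == label:
            correct += 1
    return correct / len(samples)


# Hypothetical two-channel features for two conditions.
samples = [
    ([0.0, 0.1], "delay"), ([0.1, 0.0], "delay"), ([0.2, 0.1], "delay"),
    ([1.0, 1.1], "branching"), ([1.1, 1.0], "branching"), ([0.9, 1.0], "branching"),
]
print(leave_one_out_accuracy(samples))  # → 1.0
```

Leave-one-out uses every sample for testing exactly once, which suits small per-participant datasets like those in fNIRS studies.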

The second set of experiments involved human-robot cooperation. The human monitored the robot's status and sorted the rocks that the robot found on Mars. The robot's reports required a human response for each rock it found. In the Delay task, the participant checked whether two rock classification messages arrived immediately one after the other. In the Dual-Task condition, the participant checked whether successive messages were of the same type. Branching required applying the Delay rule to rock classification messages and the Dual-Task rule to location messages. The tasks were presented in pseudo-random order, and participants practiced them until they reached 80% accuracy. The fNIRS sensors were then placed on the forehead to record a baseline measure, after which each participant completed ten trials of each of the three conditions.

The authors then tested whether they could distinguish two variations of branching. In random branching, the message types appeared in random order, while in predictive branching a rock classification message appeared every third stimulus. The procedure was otherwise identical to the preceding experiment.
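A minimal sketch of how the two stimulus orders differ, assuming every non-rock stimulus is a location message. The function names and the `p_rock` parameter are hypothetical; `p_rock=1/3` is chosen here only so that the random condition has the same average rate of rock messages as the predictive one.

```python
import random


def predictive_sequence(n_stimuli):
    """Predictive branching: every third stimulus is a rock classification message."""
    return ["rock" if (i + 1) % 3 == 0 else "location" for i in range(n_stimuli)]


def random_sequence(n_stimuli, p_rock=1 / 3, seed=None):
    """Random branching: each stimulus type is drawn independently.

    p_rock is a hypothetical parameter; 1/3 matches the predictive condition's average rate.
    """
    rng = random.Random(seed)
    return ["rock" if rng.random() < p_rock else "location" for _ in range(n_stimuli)]


print(predictive_sequence(6))
# → ['location', 'location', 'rock', 'location', 'location', 'rock']
print(random_sequence(6, seed=42))  # same seed, same order on every run
```

Only the predictability of the rock messages changes between the two conditions, which is what lets the experiment isolate that one factor.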

The final test used the branching and delay tasks to represent branching and non-branching states. The system was trained on data from these tasks and then classified new data using the trained model.
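The train-then-classify loop can be sketched with a single hypothetical feature per trial (say, a trial's mean hemoglobin change). The midpoint threshold below is an assumed stand-in, not the authors' classifier: it is calibrated on labeled branching and delay data, then applied to new trials.

```python
from statistics import mean


def train_threshold(branching_features, delay_features):
    """Calibrate a one-feature classifier: boundary halfway between the class means."""
    branching_mean = mean(branching_features)
    delay_mean = mean(delay_features)
    threshold = (branching_mean + delay_mean) / 2
    branching_is_higher = branching_mean > delay_mean
    return threshold, branching_is_higher


def classify(feature, model):
    """Label a new trial as branching or non-branching using the calibrated threshold."""
    threshold, branching_is_higher = model
    above = feature > threshold
    return "branching" if above == branching_is_higher else "non-branching"


# Hypothetical calibration data (one feature value per trial).
model = train_threshold(branching_features=[2.0, 3.0], delay_features=[0.0, 1.0])
print(model)                 # → (1.5, True)
print(classify(2.2, model))  # → branching
print(classify(0.3, model))  # → non-branching
```

Storing which class lies above the boundary keeps the sketch correct even if the feature turns out to be lower for branching than for delay.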

Results

The preliminary study achieved 68.4% accuracy across three pairwise classifications and 52.9% accuracy for three-way classification, above the chance levels of 50% and about 33%, respectively. This suggested that fNIRS was worth using in further studies.

For the first robot experiment, participants whose accuracy was below 70% were excluded, and each measure was checked for normality. Normally distributed data were analyzed with a one-way repeated-measures ANOVA, and non-normal data with a non-parametric repeated-measures ANOVA. Accuracy and response time were not significantly correlated, and no learning effect was found. Total hemoglobin was higher in the branching condition.
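The analysis path above can be sketched in plain Python. The helper names are hypothetical, the normality check itself (for example, a Shapiro-Wilk test) is left out, and the Friedman test is used as the common non-parametric repeated-measures alternative; the summary does not name the exact test the authors chose.

```python
from statistics import mean


def keep_accurate(rows, accuracies, min_accuracy=0.70):
    """Drop participants whose task accuracy fell below the 70% cutoff."""
    return [row for row, acc in zip(rows, accuracies) if acc >= min_accuracy]


def rm_anova_f(data):
    """One-way repeated-measures ANOVA F statistic.

    data[i][j] is participant i's value in condition j.
    """
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # residual after removing participant effects
    return (ss_cond / (k - 1)) / (ss_error / ((n - 1) * (k - 1)))


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_chi2(data):
    """Friedman statistic (no tie correction), ranking conditions within each participant."""
    n, k = len(data), len(data[0])
    rank_rows = [average_ranks(row) for row in data]
    rank_sums = [sum(r[j] for r in rank_rows) for j in range(k)]
    return 12 * sum(s ** 2 for s in rank_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)


print(rm_anova_f([[1, 3], [2, 6]]))                      # → 9.0
print(friedman_chi2([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))  # → 6.0
```

Both tests compare conditions within each participant, which is why repeated-measures versions are needed here: every participant completed every condition.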

The next experiment found no statistically significant difference in response time or accuracy between random and predictive branching, although the correlation between response time and accuracy was significant for predictive branching. Deoxygenated hemoglobin levels were higher in random branching during the first half of the trials, so this measure could be used to distinguish between the two types of branching.
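A minimal sketch of the kind of feature this result points to. It assumes "first half of the trials" means the first half of each trial's time course, and that each trial is a list of deoxygenated-hemoglobin samples from one channel; both the data layout and the function names are hypothetical.

```python
from statistics import mean


def first_half_mean(trial_signal):
    """Mean deoxygenated-hemoglobin value over the first half of one trial's samples."""
    half = len(trial_signal) // 2
    if half == 0:
        raise ValueError("a trial needs at least two samples")
    return mean(trial_signal[:half])


def condition_means(trials_by_condition):
    """Average the first-half feature over every trial in each condition."""
    return {
        condition: mean(first_half_mean(trial) for trial in trials)
        for condition, trials in trials_by_condition.items()
    }


# Hypothetical single-channel deoxy-Hb samples; only the first half of each trial counts.
trials = {
    "random": [[2.0, 4.0, 0.0, 0.0], [4.0, 6.0, 0.0, 0.0]],
    "predictive": [[1.0, 1.0, 9.0, 9.0]],
}
print(condition_means(trials))  # → {'random': 4.0, 'predictive': 1.0}
```

A per-trial value like this could then serve as the input feature to a classifier that separates the two branching conditions.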

In the last test, the system correctly distinguished between task types and adjusted its mode.

Contents

Multitasking is difficult for humans unless the tasks allow information from one to be integrated into the other. Repeated task switching can lead to lower accuracy, longer completion times, and greater frustration. Past work has measured mental workload through means such as pupil dilation and blood pressure, and other work has estimated the cost of interruptions from inputs such as eye tracking or desktop activity. One study identified three multitasking scenarios: branching, dual-task, and delay. Branching involves a tree of tasks, dual-task involves two unrelated tasks, and delay involves postponing one task until later. That study used functional MRI (fMRI) and could distinguish between the three scenarios.

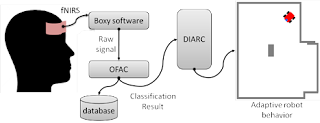

Non-invasive brain-sensing systems can enhance researchers' understanding of the mind during tasks. The authors' system uses functional near-infrared spectroscopy to distinguish between four states that occur during multitasking. Their goal is to use this system to assist in the development of user interfaces. They chose a human-robot team scenario to study cognitive multitasking in UIs, since these tasks require the human to perform their own work while monitoring the robot.

The authors developed a system that adjusts a UI on the fly depending on the type of task being performed. The system can change the robot's goal structure and also has a debug mode. When a branching state is detected, the robot autonomously moves to new locations; otherwise, it returns to its default state.
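The adaptation rule described above can be sketched as a small controller. The class name and mode strings are hypothetical, and because the summary does not say what the debug mode does, it is assumed here to log each classification and the mode it produced.

```python
class AdaptiveRobotController:
    """Hypothetical controller: maps each classified cognitive state to a robot mode."""

    def __init__(self, debug=False):
        self.mode = "default"
        self.debug = debug
        self.log = []  # filled only in debug mode

    def update(self, predicted_state):
        # Branching detected: let the robot move to new locations on its own.
        # Any other state: fall back to the default behavior.
        new_mode = "autonomous" if predicted_state == "branching" else "default"
        if self.debug:
            # Assumed debug behavior: record every classification and the mode it produced.
            self.log.append((predicted_state, new_mode))
        self.mode = new_mode
        return new_mode


controller = AdaptiveRobotController(debug=True)
for state in ["non-branching", "branching", "non-branching"]:
    controller.update(state)
print(controller.log)
# → [('non-branching', 'default'), ('branching', 'autonomous'), ('non-branching', 'default')]
```

Keeping the mapping in one `update` method makes it easy to swap in a different policy, such as requiring several consecutive branching classifications before switching.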

Discussion

The authors wanted to find out whether fNIRS could distinguish between tasks well enough to adapt user interfaces for better multitasking. Their multiple experiments and proof-of-concept system strongly suggest that this is a viable way to enhance multitasking.

I am concerned that this innovation will add yet another level of complexity to designing user interfaces, since designers would now have to classify the scenarios they expect to occur. If this could be automated somehow, that would be beneficial.

The authors found one way to distinguish between tasks, but I imagine that there are far more. Future work might find one that is even less obtrusive than external sensors.
