Embodiment in Brain-Computer Interaction

Kenton O'Hara, Abigail Sellen, Richard Harper

Presented at CHI 2011, May 7-12, 2011, Vancouver, British Columbia, Canada

Author Bios

- Kenton O'Hara is a Senior Researcher in the Socio-Digital Systems group at Microsoft Research Cambridge. He studies Brain-Computer Interaction.

- Abigail Sellen is a Principal Researcher at Microsoft Research Cambridge and co-manages the Socio-Digital Systems group. She holds a PhD in Cognitive Science from the University of California at San Diego.

- Richard Harper is a Principal Researcher at Microsoft Research Cambridge and co-manages the Socio-Digital Systems group. He has published over 120 papers.

Summary

Hypothesis

What are the possibilities and constraints of Brain-Computer Interaction (BCI) in games, and how does BCI gameplay reflect social behavior?

Methods

The study used MindFlex. Participants were groups of people who knew each other, recruited through a host participant. The hosts were given a copy of the game to take home and play at their discretion. Each game session was video-recorded. Group membership was fluid. The videos were analyzed for physical behavior to understand embodiment and social meaning in the game.

Results

Users deliberately adjusted their posture to relax or to gain focus. These adjustments changed dynamically in response to how the game behaved. Players typically averted their gaze to lower their concentration, and sometimes physically turned away from the game to achieve the desired response. Even without visual feedback, the changing sound of the fan (for example, its whirring decreasing) indicated the game state. Players also built a narrative fantasy on top of the game's controls to explain what was occurring, which added an extra source of mystique and engagement. The narrative is not necessary to control the game, yet it persisted even when it contradicted how the game actually works (e.g., thinking about lowering the ball increases concentration, which raises it). Overall, embodiment plays a key role even in ostensibly mental tasks.

How and why people watched the game was not always clear, especially when players did not physically manifest their actions. In one case, a spectator gave instructions to a player; when the player showed no visible response, the spectator gave further instructions, which ended up distracting the player too much. Spectators inferred the player's state of mind from the game's effects, an inference that could easily be wrong. Players sometimes volunteered their mindset through gestures or words, and sometimes these cues were a performance for the audience. Spectatorship was a two-way relationship, with the audience interacting with the players. These interactions tended to be humorous remarks to and about the player, and they could be deliberately helpful or disruptive. The nature of the interaction reflected real-world relationships between the people involved.

Contents

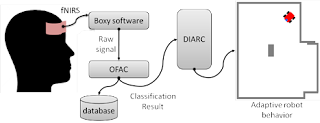

Advances in non-invasive Brain-Computer Interaction (BCI) allow for its wider use in new applications. Commercial products using BCI have recently appeared, but the design paradigms and constraints for such products remain poorly understood. Initial work in BCI was largely philosophical but later moved toward the observable. An embodied perspective suggests that understanding BCI requires understanding users' actions in the physical world, because these actions influence both BCI readings and their social meaning.

Most prior studies used BCI as a simple control mechanism or took place in a controlled laboratory environment. One study took place outside of a lab and suggested that studying BCI in its real-world context opens the way for further ethnographic work. Another study focused less on efficacy and more on the user experience. These studies noted that the games were a social experience.

To explore embodiment, the authors used a BCI-based game called MindFlex. MindFlex is a commercially available BCI game that uses a wireless headset with a single electrode. It uses EEG to measure relative levels of concentration and moves a ball with a fan: higher concentration raises the ball, and lower concentration lowers it.

The authors recommend that games use a narrative that maps correctly onto the controls. Physical interaction paradigms could also be designed to be deliberately beneficial or detrimental to the player, depending on the desired effect.

Discussion

The authors wanted to discover possible features and problems of BCI. Their study identified a few of these, but the paper read far too much like preliminary results for me to accept that these are the only possibilities and flaws. I am convinced that what the authors identified falls into the categories of constraints and uses, but I am uncertain how representative their findings are of each category as a whole.

I had not considered the design paradigms behind BCI games before reading this paper, so the reflection of social behavior found in them was particularly interesting.

I would like to see more comprehensive data on how social relationships factor into the playing of the games. The anecdotes provided by the authors were interesting, but the lack of statistics limited the ability to determine the frequency with which identified behaviors occurred.